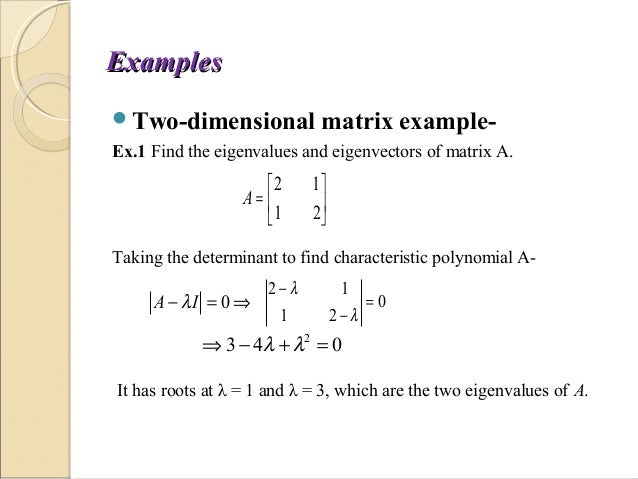

Determining the optimal number of interpretable factors by using Very Simple Structure

Factor analysis is a fundamental part of scale development and data simplification in many fields. The classic factor model is that the elements of a correlation matrix (R) may be approximated by the product of a factor matrix (F) times the transpose of F (F') plus a diagonal matrix of uniquenesses U²:

R ≈ FF' + U²

Because the rank k of the F matrix is much less than the rank n of the original correlation matrix, factor analysis is a data reduction model. Principal Components analysis and MultiDimensional Scaling are alternative data reduction and description models.

Determining the appropriate number of factors from a factor analysis is perhaps one of the greatest challenges in factor analysis. Procedures for making this choice include:

Chi Square rule #2: Extracting factors until the change in chi square from factor n to factor n+1 is not significant.

Parallel Analysis: Extracting factors until the eigenvalues of the real data are less than the corresponding eigenvalues of a random data set of the same size.

Scree Test: Plotting the magnitude of the successive eigenvalues and applying the scree test (a sudden drop in eigenvalues, analogous to the change in slope seen when scrambling up the talus slope of a mountain and approaching the rock face).

Eigenvalue of 1 rule: Extracting principal components until the eigenvalue is less than 1.

Meaning: Extracting factors as long as they are interpretable.

VSS: Using the Very Simple Structure criterion.

Each of the procedures has its advantages and disadvantages. Using either the chi square test or the change in chi square test is, of course, sensitive to the number of subjects. It leads to the nonsensical condition that if one wants to find many factors, one simply runs more subjects. Parallel analysis is partially sensitive to sample size in that for large samples the eigenvalues of random factors will be very small. The scree test is quite appealing but can lead to differences of interpretation as to when the scree "breaks". The eigenvalue of 1 rule, although the default for many programs, seems to be a rough way of dividing the number of variables by 3. Extracting interpretable factors means that the number of factors reflects the investigator's creativity more than the data. VSS, while very simple to understand, will not work very well if the data are very factorially complex. (Simulations suggest it will work fine if the complexities of some of the items are no more than 2.)

Most users of factor analysis tend to interpret factor output by focusing their attention on the largest loadings for every variable and ignoring the smaller ones. Very Simple Structure operationalizes this tendency by comparing the original correlation matrix to that reproduced by a simplified version (S) of the original factor matrix (F). S is composed of just the c greatest (in absolute value) loadings for each variable. The complexity c is a parameter of the model and may vary from 1 to the number of factors. The VSS criterion compares the fit of the simplified model to the original correlations:

VSS = 1 - sumsquares(r*)/sumsquares(r)

where R* is the residual matrix R* = R - SS', and r* and r are the elements of R* and R respectively. VSS for a given complexity will tend to peak at the optimal (most interpretable) number of factors (Revelle and Rocklin, 1979).

Although originally written in Fortran for mainframe computers, VSS has been adapted to microcomputers (e.g., Macintosh OS 6-9) using Pascal. With the availability of R, an extremely powerful and freely available statistical package, we now release R code for calculating VSS. This is a preliminary release; further modifications will make it a package within the R system. For an introduction to R for psychologists, see the tutorials by Baron and Li or by Revelle.

To use this code within R, simply copy the following command into R to load VSS and associated functions:

For a data matrix mydata, issue the call:

My.vss <- VSS(mydata, n = 8, rotate = "none", diagonal = FALSE)  # compares up to 8 factors

To compare the VSS result to a scree test, find the correlation matrix and then call VSS.scree. This function finds and plots the eigenvalues of the principal components of the correlation matrix:

VSS.scree(cor(mydata), main = "scree plot")

Output from VSS is a data frame with n (number of factors to try) rows and 21 elements. These include "dof", the degrees of freedom in the model for this number of factors.
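The VSS criterion can be illustrated in a few lines of base R. What follows is a minimal sketch, not the VSS() function itself. The helper names `simplify_loadings` and `vss_criterion` are hypothetical, and the loading matrix is made up. The sketch assumes the fit is judged on off-diagonal elements only, which is our reading of `diagonal = FALSE`. It builds S by keeping the c largest absolute loadings per variable, forms the residual R* = R - SS', and returns 1 - sumsquares(r*)/sumsquares(r).

```r
# Hypothetical sketch of the VSS criterion in base R (not the VSS() function).
# Assumption: the loading matrix is supplied; in practice it would come from a
# factor extraction for a chosen number of factors.

# Build S: keep only the `complexity` largest (in absolute value) loadings in
# each row and zero the rest. `complexity` must not exceed ncol(loadings).
simplify_loadings <- function(loadings, complexity) {
  S <- matrix(0, nrow(loadings), ncol(loadings))
  for (i in seq_len(nrow(loadings))) {
    keep <- order(abs(loadings[i, ]), decreasing = TRUE)[seq_len(complexity)]
    S[i, keep] <- loadings[i, keep]
  }
  S
}

# VSS = 1 - sumsquares(r*) / sumsquares(r), with R* = R - SS'.
# Assumption: only off-diagonal elements are summed (the diagonal is not fit).
vss_criterion <- function(R, loadings, complexity) {
  S <- simplify_loadings(loadings, complexity)
  R_star <- R - S %*% t(S)
  off <- row(R) != col(R)
  1 - sum(R_star[off]^2) / sum(R[off]^2)
}

# Hypothetical two-factor loadings; variables 3 and 6 load on both factors.
loadings <- rbind(c(.8, 0), c(.7, 0), c(.6, .4),
                  c(0, .8), c(0, .7), c(.4, .6))
R <- loadings %*% t(loadings)
diag(R) <- 1  # adding the uniquenesses U^2 sets the diagonal to 1

vss1 <- vss_criterion(R, loadings, complexity = 1)
vss2 <- vss_criterion(R, loadings, complexity = 2)
print(c(vss1 = vss1, vss2 = vss2))
```

Here complexity 2 reproduces the off-diagonal correlations exactly (VSS = 1). Complexity 1 drops the cross-loadings of variables 3 and 6, so VSS falls below 1. To look for the peak that the text describes, one would compute `vss_criterion` for loading matrices from 1, 2, 3, … factor solutions at a fixed complexity.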

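The eigenvalue of 1 rule and the scree test both start from the eigenvalues of the correlation matrix. The base-R sketch below is only an illustration. It assumes a correlation matrix constructed from the factor model R ≈ FF' + U² with hypothetical loadings, and `kaiser_count` is a hypothetical helper name. It prints the eigenvalues a scree plot would show and counts how many exceed 1.

```r
# Hypothetical sketch of the eigenvalue-of-1 rule in base R: count the
# principal component eigenvalues of the correlation matrix that exceed 1.
kaiser_count <- function(R) {
  ev <- eigen(R, symmetric = TRUE, only.values = TRUE)$values
  sum(ev > 1)
}

# Hypothetical correlation matrix from a two-factor simple structure:
# R = FF' + U^2, with U^2 chosen so that the diagonal of R is 1.
loadings <- cbind(c(.8, .7, .6, 0, 0, 0),
                  c(0, 0, 0, .8, .7, .6))
R <- loadings %*% t(loadings) + diag(1 - rowSums(loadings^2))

# Eigenvalues in decreasing order: the values a scree plot would display.
ev <- eigen(R, symmetric = TRUE, only.values = TRUE)$values
print(round(ev, 3))

n_factors <- kaiser_count(R)
print(n_factors)
```

`plot(ev, type = "b")` draws a basic scree plot of the same values. With six variables and two clean factors, the rule finds two components here. That happens to match the "number of variables divided by 3" heuristic mentioned above, though one example proves nothing about the heuristic in general.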

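The parallel analysis rule (extract factors until the real eigenvalues fall below those of random data of the same size) can be sketched in base R as follows. This follows Horn's procedure under stated assumptions. It uses principal component eigenvalues and the mean of the random eigenvalues across replications; other variants use factor eigenvalues or a high percentile instead. The function name `parallel_count`, the `n_iter` parameter, and the simulated data are all hypothetical.

```r
# Hypothetical sketch of parallel analysis in base R.
# Assumptions: principal component eigenvalues, mean over random data sets.
parallel_count <- function(X, n_iter = 20) {
  n <- nrow(X)
  p <- ncol(X)
  real <- eigen(cor(X), symmetric = TRUE, only.values = TRUE)$values
  # Each column holds the sorted eigenvalues of one random n x p data set.
  random <- replicate(n_iter, {
    Z <- matrix(rnorm(n * p), n, p)
    eigen(cor(Z), symmetric = TRUE, only.values = TRUE)$values
  })
  mean_random <- rowMeans(random)
  # Stop at the first real eigenvalue not larger than its random counterpart.
  below <- which(real <= mean_random)
  if (length(below) == 0) p else below[1] - 1
}

# Hypothetical data: 500 cases generated from a two-factor model.
set.seed(1)
loadings <- cbind(c(.8, .7, .6, 0, 0, 0),
                  c(0, 0, 0, .8, .7, .6))
n <- 500
scores <- matrix(rnorm(n * 2), n, 2)
unique_sd <- sqrt(1 - rowSums(loadings^2))
noise <- matrix(rnorm(n * 6), n, 6) %*% diag(unique_sd)
X <- scores %*% t(loadings) + noise

n_factors <- parallel_count(X)
print(n_factors)
```

The random data are regenerated for each replication, so the count can change with the seed when the real and random eigenvalues are close. In this example the two real factors sit far above the random baseline.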